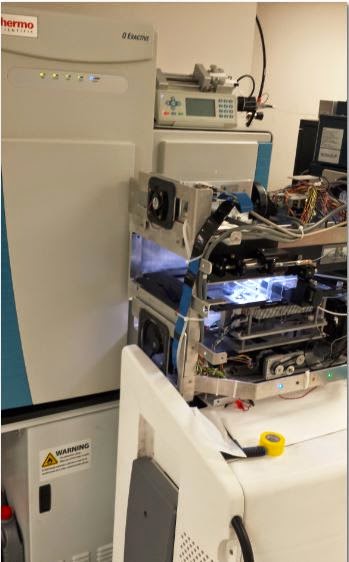

Boy o' boy! Intact analysis and top down proteomics are all the rage these days! A lot of this has to do with the Exactives. The Q Exactive is great for both. The QE Plus has the new Protein mode option, and the Exactive Plus EMR is probably the easiest and most sensitive instrument for intact analysis ever made. A large percentage of my day job these days is supporting you guys with intacts and top downs. A problem I've run into is that the standards out there kind of suck.

My friend Aimee Rineas at Dr. Lisa Jones's lab at IUPUI took a swing at fixing this problem with me a while back.

Our solution? A pretty thorough analysis of the 6 (?? 7?? read on, lol!) protein Mass Prep mixture from Waters. It is part number 186004900. Be careful, there are several similar products and the Waters website doesn't do a very good job of distinguishing them. This is the one I'm talking about.

Great! So we have a standard. Easy, right? Not so fast. The chart above is all the information you get on the proteins. For Ribonuclease A, the mass is rounded to the nearest 100? Sure, this will be okay for some TOFs, 'cause that's about as close as you can get in the mass accuracy department, but I'm running Orbitraps. I want to see my mass accuracy in parts per million, not the level of mass accuracy I will get with a TOF or SDS-PAGE.

This is where the work comes in. Aimee and I used a really short column, a 5 cm C4, and ran this standard out several times on the Q Exactive in her lab. We did this first to obtain the intact masses and then a few more times for top down analysis.

Our best chromatography looked like this (this is an RSLCnano, we just ran the microflow with the loading pump):

Not bad for a 5 cm column, right?

Let's look at the first peak:

13681.2. This is our Ribonuclease A. To get the theoretical mass, we go to UniProt, look up the sequence, and cleave the first 25 amino acids. (This is the pro- sequence that isn't actually part of the expressed protein... it's a genetics thing that we don't have to worry about (?) in shotgun proteomics (?) but that we do have to worry about in intact and top down.)
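If you'd rather script that "trim the front, then compute the mass" step than click through a web tool, here is a minimal sketch. It uses the standard average residue masses; the function names, the toy sequence, and the `n_disulfides` option are my own illustration, not anything taken from UniProt or ExPASy, and the toy sequence is deliberately NOT the real Ribonuclease A.

```python
# Standard average residue masses in Da (amino acid minus one water).
AVG_RESIDUE_MASS = {
    "G": 57.0513, "A": 71.0779, "S": 87.0773, "P": 97.1152, "V": 99.1311,
    "T": 101.1039, "C": 103.1429, "L": 113.1576, "I": 113.1576, "N": 114.1026,
    "D": 115.0874, "Q": 128.1292, "K": 128.1723, "E": 129.1140, "M": 131.1961,
    "H": 137.1393, "F": 147.1739, "R": 156.1857, "Y": 163.1733, "W": 186.2099,
}
WATER = 18.0153      # average mass of H2O, added once per chain
HYDROGEN = 1.00794   # average mass of H


def average_mass(sequence: str, n_disulfides: int = 0) -> float:
    """Average mass of an unmodified chain; each disulfide removes two H."""
    residues = sum(AVG_RESIDUE_MASS[aa] for aa in sequence)
    return residues + WATER - 2 * HYDROGEN * n_disulfides


def mature_mass(precursor: str, n_cleaved: int, n_disulfides: int = 0) -> float:
    """Drop the first n_cleaved residues, then compute the average mass."""
    return average_mass(precursor[n_cleaved:], n_disulfides)


# Hypothetical toy precursor: 4 leading residues we pretend get cleaved off.
toy_precursor = "MALK" + "GASP"
print(f"toy mature mass: {mature_mass(toy_precursor, 4):.3f} Da")
```

To use it for real, paste in the full precursor sequence from the database entry and set `n_cleaved` to the length of the leader it annotates. Check how many disulfides your protein carries too, because those missing hydrogens are easily visible at this level of mass accuracy.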

According to ExPASy, the theoretical mass is 13681.32. This puts us 0.12 Da, or about 8.8 ppm, off of theoretical. Boom! (On a QE Plus run since, I've actually tightened this awesome mass accuracy!)
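For anyone who wants to check the arithmetic, here is a minimal sketch of the ppm calculation using the two masses quoted above. The function name is just mine for illustration.

```python
def ppm_error(observed: float, theoretical: float) -> float:
    """Signed mass error in parts per million relative to the theoretical mass."""
    return (observed - theoretical) / theoretical * 1e6


observed = 13681.2       # deconvoluted mass from the first peak
theoretical = 13681.32   # theoretical mass quoted from ExPASy

delta_da = observed - theoretical
print(f"{delta_da:+.2f} Da, {ppm_error(observed, theoretical):+.1f} ppm")
```

The sign is negative because we measured slightly light, and the magnitude lands just under 9 ppm.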

Okay. I know there are some good TOFs out there. There are probably some that could get us within somewhere close to this mass. But if we dig a little deeper, we see the real power the Orbitrap has on this sample. Look at this below.

Can you see this? Sorry. Screen capture and blogger format only get me so far.

If we look closer at the pure protein we purchased, an inconvenient fact emerges. What we thought was our pure protein, we can see with the QE, most certainly is not. At the very least, we find that this protein is phosphorylated. This problem is exacerbated when you increase the sensitivity even further by analyzing this same sample with a QE Plus. (I have limited data showing this mix actually has a 7th proteoform in it that I need to evaluate further.)

By the way, this protein is known to be phosphorylated in nature. The manufacturer just wasn't aware some of it slipped through their purification process. We also did top down, remember, so we should be able to localize the modification. I just haven't quite gotten there yet. Free time is a little limited these days.

(I have used ProsightPC and localized this modification, I'll try to put it up later).

People doing intact analysis are sometimes critical of the "noise" they find in an Orbitrap. Further evaluation of that noise will often reveal that it is really minor impurities in the sample. Are they biologically relevant if a TOF can't see them and only a QE Plus can? I don't know. Probably not. Maybe? Wouldn't it be better to know that they are there in any case?

I started this side project months ago, considered actually writing up a short note on it, and figured, "what the heck," more people will probably read it if I put it here anyway. I've also gotten to run this sample on a QE Plus, which revealed even more cool stuff.

This is incomplete, and not double-checked, but these are the masses that I have so far for this standard:

| Protein | Part list mass (Da) | Our mass (Da) |
| --- | --- | --- |
| Ribonuclease A, bovine | 13700 | 13680.9 |
| | | 13779.3 |
| Cytochrome C, horse | 12384 | 12358.2 |
| Albumin, bovine | 66430 | 66426.7 |
| Myoglobin, horse | 16950 | 16950.5 |
| Myoglobin, horse + heme | 17600 | |
| Enolase, yeast | 46671 | 46668.8 |
| Phosphorylase B | 97200 | 97129 |
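To see at a glance how far the part list masses sit from what we measured, here is a quick sketch that subtracts one column from the other. The numbers are copied from the table (only rows where both masses are filled in); the variable and function names are just for illustration.

```python
# (protein, part list mass in Da, our measured mass in Da), copied from the table.
masses = [
    ("Ribonuclease A, bovine", 13700, 13680.9),
    ("Cytochrome C, horse", 12384, 12358.2),
    ("Albumin, bovine", 66430, 66426.7),
    ("Myoglobin, horse", 16950, 16950.5),
    ("Enolase, yeast", 46671, 46668.8),
    ("Phosphorylase B", 97200, 97129),
]


def delta(listed: float, measured: float) -> float:
    """Measured minus listed mass, in Da."""
    return measured - listed


for name, listed, measured in masses:
    print(f"{name:<24} {delta(listed, measured):+8.1f} Da")

# Protein whose listed mass is furthest from the measured one.
worst = max(masses, key=lambda row: abs(delta(row[1], row[2])))
print("largest gap:", worst[0])
```

Keep in mind these gaps mix together rounding in the part list and real measurement differences, so they are not mass accuracy numbers. For that you need a proper theoretical mass from the sequence.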

P.S. All of this data was processed with ProMass. I, too, am a creature of habit. Protein Deconvolution gives me tons more tools and better data but for a super simple deconvolution I still default to good old ProMass or MagTran. If I had written this up and tried to submit it somewhere, you bet your peppy I'd take screenshots from my PDecon runs though!
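If you've never looked under the hood of a "super simple deconvolution," here is a minimal sketch of the textbook adjacent-charge-state calculation. To be loud about it: this is NOT how ProMass, MagTran, or Protein Deconvolution work internally; it is just the by-hand math for two neighboring peaks in a charge envelope, assuming plain protonation and positive mode. The function names are mine.

```python
PROTON = 1.007276  # mass of a proton in Da


def mz(mass: float, z: int) -> float:
    """m/z of a protein of neutral mass `mass` carrying z protons."""
    return (mass + z * PROTON) / z


def charge_from_adjacent(mz_low: float, mz_high: float) -> int:
    """Charge of the higher-m/z peak, given its neighbor one charge higher.

    mz_high carries z protons and mz_low carries z + 1, which gives
    z = (mz_low - PROTON) / (mz_high - mz_low).
    """
    return round((mz_low - PROTON) / (mz_high - mz_low))


def mass_from_peak(mz_value: float, z: int) -> float:
    """Neutral mass from one peak once its charge is known."""
    return z * (mz_value - PROTON)


# Simulate two neighboring charge states for the Ribonuclease A theoretical mass.
true_mass = 13681.32
peak_10, peak_11 = mz(true_mass, 10), mz(true_mass, 11)

z = charge_from_adjacent(peak_11, peak_10)
print(z, round(mass_from_peak(peak_10, z), 2))
```

Real software averages over the whole envelope and deals with noise and overlapping species, which is exactly why I'd still reach for a proper tool on anything messier than a clean standard.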